Modernise the infrastructure of this blog

When I created this blog 3 years ago, the main purpose was to have a place to reflect on what I was learning and to practise English. At that time, managing infrastructure as code looked daunting, so I recorded it as tech debt. That debt then went unpaid for the next 3 years, until a random afternoon last Sunday, when the idea of managing this blog’s infrastructure as code struck me again. This time, I decided to delay no more.

Initially, I thought I would simply run a few `terraform import` commands and that would be it. Nice and easy. But once I started reviewing my resources and how they fit together, I began to frown. In the end, I did not just import the resources as they were; I recreated some of them and changed the solution as well.

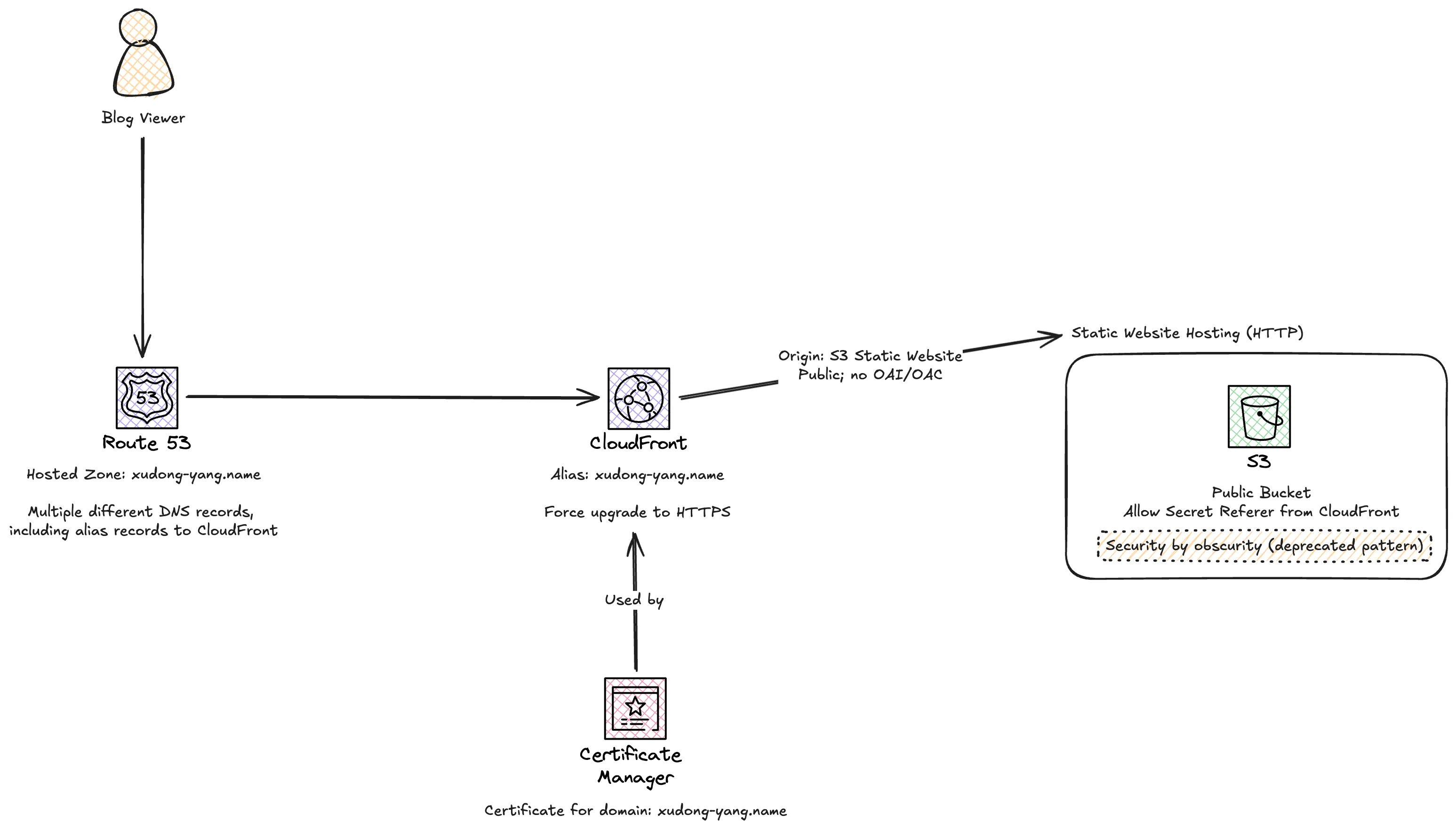

So this is my previous solution:

I remember the dilemma I faced when I chose S3 static website hosting. To use this feature, the bucket must be public, because HTTP requests from the internet don’t carry an AWS identity. However, keeping an S3 bucket public goes against AWS best practice. I had to ignore some warnings and convince myself that this was intentional and that nothing was wrong in this particular situation. To prevent people from accessing my S3 static website directly, I added a bucket policy that required a secret Referer HTTP header. CloudFront injected the same Referer header into its requests, so effectively only CloudFront could access the content of my S3 bucket. That Referer header served as a password protecting the bucket from arbitrary access.
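For readers who haven’t seen this pattern, the secret-Referer setup boils down to a bucket policy of roughly the following shape. The sketch below builds one as a plain TypeScript object and prints it as JSON. The bucket name and secret value are hypothetical placeholders, and `StringEquals` is just one way to write the condition on the `aws:Referer` key; this is an illustration of the technique, not the exact policy this blog used.

```typescript
// Sketch of a "secret Referer" bucket policy, built as a plain object.
// ASSUMPTION: bucket name and secret are hypothetical placeholders.
const bucketName = "example-blog-bucket";
const secretReferer = "a-long-random-string-only-cloudfront-knows";

interface PolicyStatement {
  Sid: string;
  Effect: "Allow" | "Deny";
  Principal: string;
  Action: string;
  Resource: string;
  Condition: Record<string, Record<string, string>>;
}

function refererPolicy(bucket: string, secret: string) {
  const statement: PolicyStatement = {
    Sid: "AllowGetOnlyWithSecretReferer",
    Effect: "Allow",
    // Anonymous principal: S3 website endpoints only serve publicly readable content.
    Principal: "*",
    Action: "s3:GetObject",
    Resource: `arn:aws:s3:::${bucket}/*`,
    // The read is granted only when the Referer header equals the secret,
    // which CloudFront adds to its origin requests as a custom header.
    Condition: { StringEquals: { "aws:Referer": secret } },
  };
  return { Version: "2012-10-17", Statement: [statement] };
}

const policy = refererPolicy(bucketName, secretReferer);
console.log(JSON.stringify(policy, null, 2));
```

Anyone who learns the secret value can still read the bucket directly, which is part of why this approach is weaker than having CloudFront sign its requests.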

Maybe this solution was OK 3 or 4 years ago. Maybe not. I am certain that back then my AWS skills were not as advanced as they are today. Perhaps a better method already existed, and I just didn’t realise it. Either way, things looked different when I reviewed this solution again.

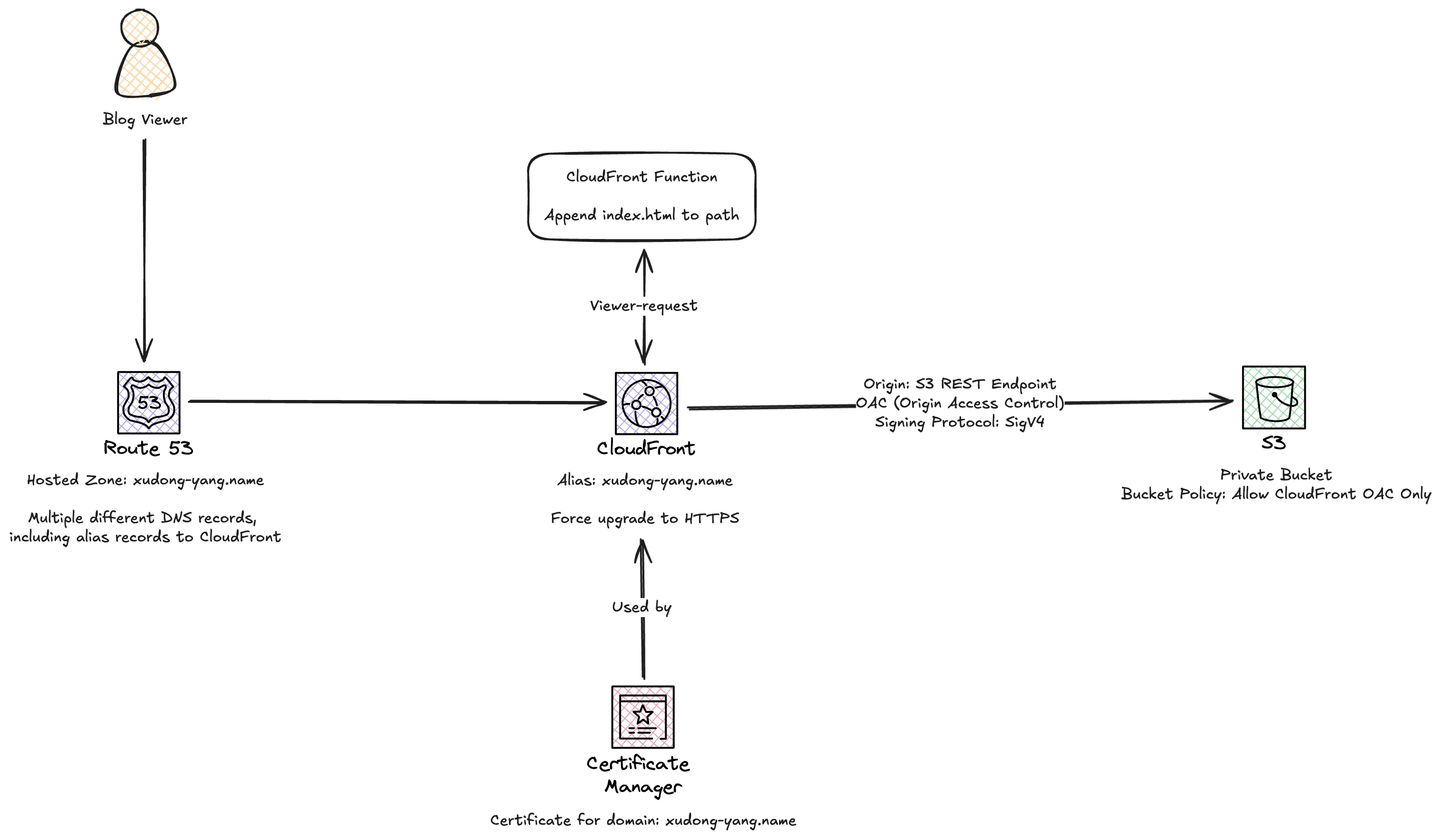

After some consideration and research, I eventually re-architected this blog like this:

Compared with the previous solution, here are the major differences:

- The S3 bucket becomes private, with all public access blocked;

- The origin type of the CloudFront distribution changes from S3 Static Website to S3 REST API (in other words, CloudFront now fetches objects from S3 directly instead of going through the hosted website);

- Origin Access Control (OAC) is enabled on the CloudFront distribution’s origin (in other words, CloudFront signs its requests to the S3 bucket, and the bucket policy only allows requests signed by CloudFront);

- Since the static website hosting feature (which resolved index documents automatically) is turned off, and `hugo` builds each page as `/page-uri/index.html`, a CloudFront function is added for the viewer-request event. It appends `/index.html` if the URI doesn’t contain `.` and doesn’t end with `/`, and it appends `index.html` if the URI ends with `/`. This way, CloudFront knows the exact S3 object to load. This CloudFront function is essential; without it, the `hugo` template would have to be changed to append `index.html` to every URL. I don’t want to change the `hugo` template, because the change is cumbersome and the URLs in the browser’s address bar wouldn’t look nice.
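The URI rewrite rule in the last bullet is small enough to sketch in full. Below is a minimal TypeScript version of that rule. CloudFront Functions themselves run JavaScript, so the type annotations would need to be stripped before deploying, and the two interfaces are assumptions that model only the `event.request.uri` field this logic touches (the real event object carries much more). It uses `slice` and `indexOf` rather than newer string helpers so the logic stays plain ES5.

```typescript
// Minimal shapes for the parts of a viewer-request event used here.
// ASSUMPTION: the real CloudFront Functions event has many more fields.
interface CloudFrontRequest {
  uri: string;
}

interface CloudFrontEvent {
  request: CloudFrontRequest;
}

// Maps Hugo's "pretty" URLs onto the index.html objects stored in S3.
function handler(event: CloudFrontEvent): CloudFrontRequest {
  const request = event.request;
  const uri = request.uri;

  if (uri.slice(-1) === "/") {
    // Directory-style URI: "/posts/foo/" -> "/posts/foo/index.html"
    request.uri = uri + "index.html";
  } else if (uri.indexOf(".") === -1) {
    // No dot anywhere in the URI: "/posts/foo" -> "/posts/foo/index.html"
    request.uri = uri + "/index.html";
  }
  // Anything else (e.g. "/css/main.css") is forwarded unchanged.
  return request;
}

// Usage: print how a few sample URIs are rewritten.
for (const uri of ["/", "/posts/foo/", "/posts/foo", "/css/main.css"]) {
  console.log(uri, "->", handler({ request: { uri } }).uri);
}
```

Because the rule looks for a dot anywhere in the URI, a path such as `/posts/v1.2` would be passed through unchanged; checking only the last path segment would avoid that, should it ever matter for this blog’s URLs.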

Now all of this blog’s infrastructure is managed by Terraform, except the S3 bucket that stores the CloudFront distribution’s access logs. The access log type I have been using is now shown as S3 (Legacy), and there is a new S3 option, alongside CloudWatch Logs and Kinesis Data Firehose options. I did some research and found more room for improvement. For example, my current access log format is the W3C Extended Log File Format, whereas Parquet seems to be a much better format in terms of storage size and query speed. I have made up my mind to move to the new S3 log delivery type with the Parquet format, but that looks like another big rabbit hole, so I have left it undone for now. For the moment, I simply hardcoded the existing logging bucket name as the logging destination in the Terraform code. This little piece might become a post of its own in the future.